12 Dec 2025

IH: I've started working on the Orchestrator system. The basic idea is that the Orchestrator is given a sequence of states, each with its own runtime (duration) and optional tick behavior. Each state stores a small amount of metadata about itself and exposes three Action callbacks (onEnter, onUpdate, onExit) that can run any custom logic.

Right now, this is just the bare-bones foundation. Eventually, the Orchestrator will be wired into multiple other systems, and different states will trigger effects at specific times. The main challenge is to define something that's easy to author, while the Orchestrator handles all the repetitive timing and state-management work automatically.
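To make the idea concrete, here is a minimal Python sketch of a state sequence with enter/update/exit hooks. It is an illustration under assumptions, not the project's Unity C# code: the names `State`, `Orchestrator`, `on_enter`, `on_update` and `on_exit` are hypothetical, each state's runtime is assumed to be a fixed number of seconds, and the Orchestrator is assumed to be advanced by calling `update(dt)` once per frame.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


def _noop() -> None:
    """Default hook that does nothing."""


@dataclass
class State:
    """One step in the sequence: a little metadata plus three hooks (hypothetical names)."""
    name: str
    duration: float  # runtime in seconds
    on_enter: Callable[[], None] = _noop
    on_update: Optional[Callable[[float], None]] = None  # optional tick; receives progress 0..1
    on_exit: Callable[[], None] = _noop


class Orchestrator:
    """Plays states in order and owns all of the timing and transition logic."""

    def __init__(self, states: List[State]) -> None:
        self.states = states
        self.index = -1     # -1 means "not started"
        self.elapsed = 0.0  # seconds spent in the current state

    @property
    def current(self) -> Optional[State]:
        if 0 <= self.index < len(self.states):
            return self.states[self.index]
        return None

    def start(self) -> None:
        self._enter(0)

    def _enter(self, index: int) -> None:
        self.index = index
        self.elapsed = 0.0
        if self.current is not None:
            self.current.on_enter()

    def update(self, dt: float) -> None:
        state = self.current
        if state is None:
            return  # not started yet, or the sequence has finished
        self.elapsed += dt
        if state.on_update is not None:
            progress = 1.0 if state.duration <= 0 else min(self.elapsed / state.duration, 1.0)
            state.on_update(progress)
        if self.elapsed >= state.duration:
            state.on_exit()
            self._enter(self.index + 1)


# Usage: two states advanced with a fixed 1-second step.
log: List[str] = []
progress: List[float] = []
seq = Orchestrator([
    State("dawn", 2.0,
          on_enter=lambda: log.append("enter dawn"),
          on_update=progress.append,
          on_exit=lambda: log.append("exit dawn")),
    State("swarm", 1.0,
          on_enter=lambda: log.append("enter swarm"),
          on_exit=lambda: log.append("exit swarm")),
])
seq.start()
for _ in range(4):
    seq.update(1.0)
```

Keeping the timing inside the Orchestrator means a state author only writes the callbacks they need; deciding when to call them lives in one place.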

I added the ability to pause, extend, and resume a state. I also finally got past a really annoying limitation where boids wouldn't cast shadows on each other. Now they do, and it looks so good. I'm still working out some small bugs, but it's a big improvement.
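Pausing, extending and resuming can be thought of as operations on a per-state clock. A minimal Python sketch, assuming a hypothetical `StateTimer` that the Orchestrator would advance once per frame; this is an illustration, not the project's actual implementation:

```python
class StateTimer:
    """Tracks how long a state has run; supports pause, resume and extend (hypothetical)."""

    def __init__(self, duration: float) -> None:
        self.duration = duration  # total runtime in seconds
        self.elapsed = 0.0
        self.paused = False

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def extend(self, seconds: float) -> None:
        # Lengthen the current state without resetting its progress.
        self.duration += seconds

    @property
    def remaining(self) -> float:
        return max(self.duration - self.elapsed, 0.0)

    @property
    def finished(self) -> bool:
        return self.elapsed >= self.duration

    def tick(self, dt: float) -> bool:
        """Advance by dt unless paused; return True once the state is done."""
        if not self.paused:
            self.elapsed += dt
        return self.finished


timer = StateTimer(3.0)
timer.tick(1.0)         # 1 s elapsed
timer.pause()
timer.tick(5.0)         # ignored while paused
timer.resume()
timer.extend(2.0)       # duration is now 5 s
done = timer.tick(2.0)  # 3 s elapsed, 2 s remaining
```

Because pause only stops the clock and extend only moves the end point, neither operation has to re-run the state's enter logic.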

More updates soon!

8 Nov 2025

NP: I've been experimenting with different soundtracks for sequencing the swarm. I tried creating music tracks with Suno; they weren't bad, but they didn't really convey the right mood. Also, people respond differently to different genres, each with its own associations, so any one style of music might not work for everyone. Instead, we decided to go with natural, organic sounds: wind, water, fire, birdsong. Bringing in the elements seems in keeping with the original concept of a mysterious swarm in a vast desert expanse. This initial storyboard is really rough, but it includes some of the audio we'll use.

11 Oct 2025

A video update from Isaac with current progress, ideas and ongoing questions:

27 Sep 2025

NP: We had an interesting conversation today about the Salt Lines project during our regular Displacement catch-up. Our focus is usually on the technical aspects of projects, but today the conversation turned philosophical. We talked about “awe”.

AWE:

“an emotion variously combining dread, veneration, and wonder that is inspired by authority or by the sacred or sublime.” (Merriam-Webster)

“a feeling of reverential respect mixed with fear or wonder.” (Google)

The conversation was triggered by Isaac suggesting that we need music to drive the sequencing of the experience and to create mood, atmosphere and suspense. The music could help to inform: where and how the swarm of boids appears; the path it follows; how it moves; and the relationship between the swarm and the visitor. As I’m going to be working on the music, I wanted to confirm how long the music/experience needs to be.

“How long does awe last?” Isaac asked.

I thought that was like asking how long a piece of string is; can it even be quantified? In any case, we googled “how long does awe last?” and got the following response:

The immediate, conscious feeling of awe is fleeting, often lasting only moments. However, the beneficial effects can last much longer, from a week to potentially longer.

The fleeting experience

Psychologically, the raw experience of an emotion is brief and ephemeral. During moments of awe, time may feel like it stops as you pause and reflect on something vast or extraordinary, but the emotional peak is short-lived. Researchers suggest that the neurophysiological shifts that accompany any emotion typically last no more than 30 minutes.

Lasting positive effects

Even though the initial feeling is brief, awe can have a significant and prolonged impact on your well-being, [including]:

Reduced stress

Expanded sense of time

Shifted perspective

Greater life satisfaction

We discussed the philosophical and metaphysical implications of the swarm appearing. Why did it emerge at all? What controls the swarm, sending it out and calling it back again? There were no definite answers, so we shifted to discussing what we’d like the visitor to experience: what do they see, hear and feel? What visual cues can we create, such as changes in the color of the sky that trigger movement? How can we create an immersive sensory experience that evokes a feeling of awe? How can the experience convey the vastness and solitude of the desert, and the unexpected appearance of the swarm? How would interaction with the swarm feel? Would it be like encountering and stroking a random cat? It was a great conversation that left us with more questions than answers.

We settled on 3’ 30” for now 😂

1 Aug 2025

JS: The terrain work has been a really exciting part of the project so far. A lot of the inspiration came from Nazia’s desert journey, especially her videos and 360° photos of the environment. The first step was to recreate a terrain similar to the one in those photographs. We started with photogrammetry, hoping to build something directly from those visuals. Unfortunately, there just wasn’t enough data to get a usable result.

After that, we discussed Unity’s terrain builder, but Isaac pointed out some compatibility issues with web-based platforms. So we shifted gears again and gave InfraWorks a try. We downloaded a sample terrain and ran a test import. The outcome wasn’t perfect, but it definitely showed promise, and that small win gave us the push we needed to keep going. On a team call, we decided to look for terrain data with the right topography and detail, something closer to what we saw in Nazia’s images. We started scouting areas in West Texas, near the Chihuahuan Desert, and managed to identify a few spots that looked promising.

Now we wait to see how those work out. Fingers crossed!

Acacia Tree, Tissardmine, Morocco

Tissardmine, Morocco

18 Jul 2025

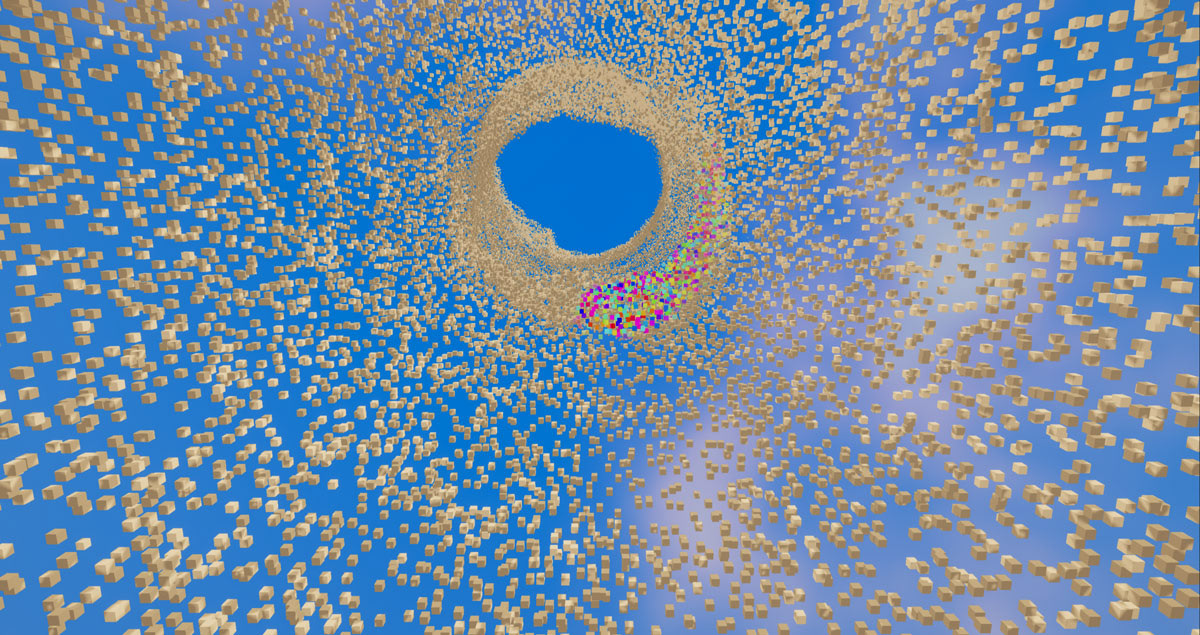

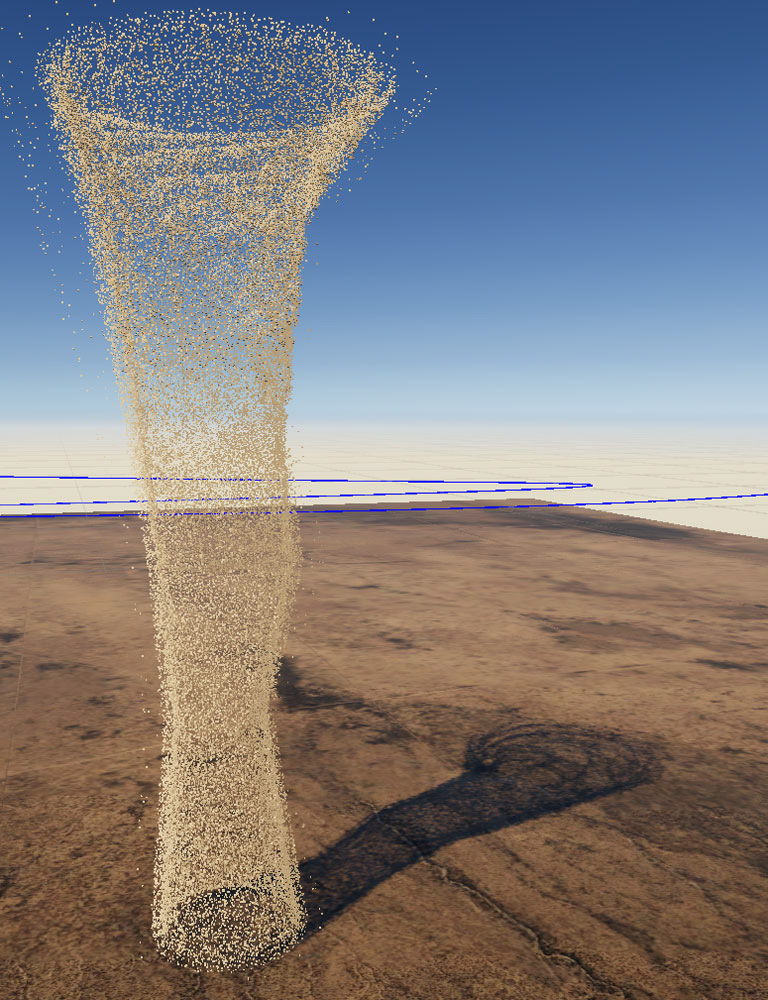

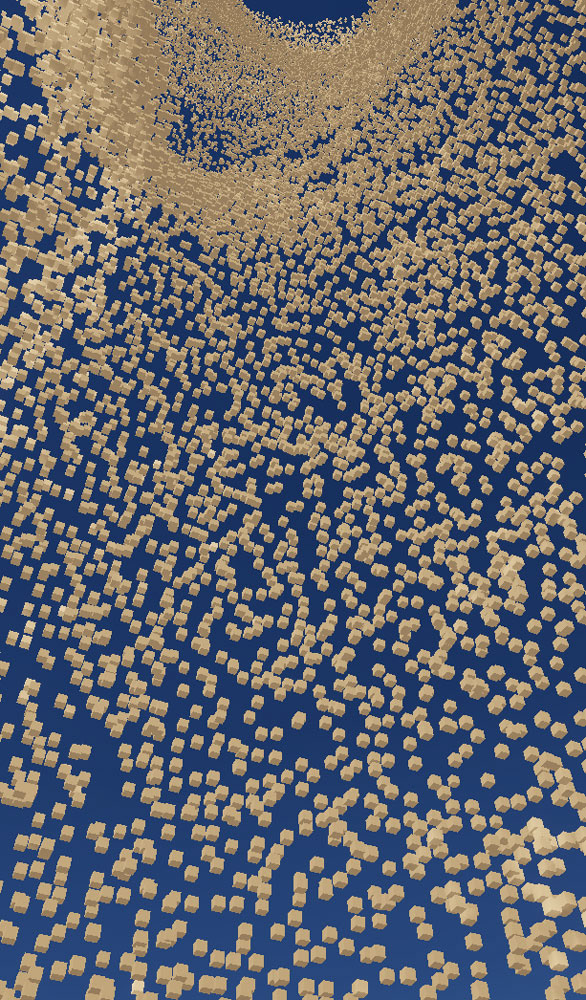

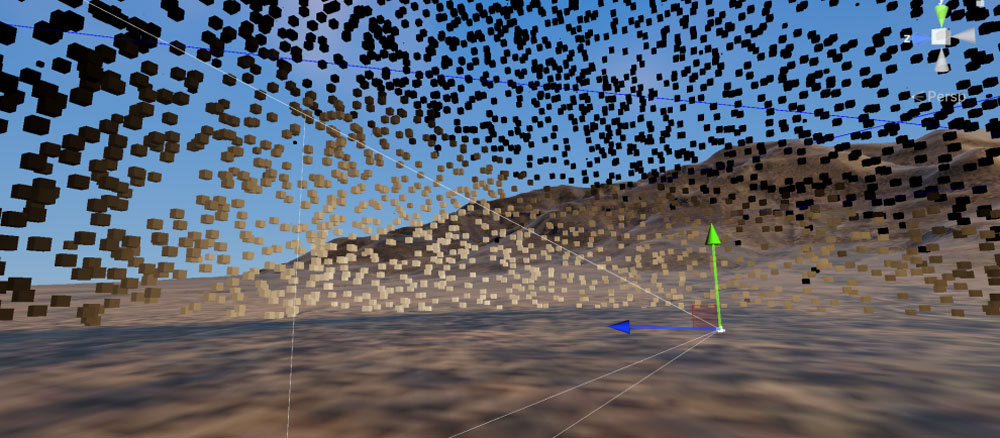

IH: For Salt Lines, my work involved creating a boid system that could render many thousands of boids in a WebGPU environment, so that the experience can be distributed over the web with no download.

I started by naively for-looping over every boid to see how many we could run. Performance wasn’t great, but we had flocking, avoidance, and cohesion working. Then I moved to Unity’s Burst compiler and suddenly had several thousand boids running at 100+ FPS. The next milestone was teaching them to follow a target, and then offsetting each boid so the swarm could form a shape: a ship, a whale, or a lion. That was a big win.
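For readers new to boids, the naive approach looks roughly like this: every boid loops over every other boid, so each frame costs O(n²) and performance drops quickly as the count grows. This is a minimal plain-Python sketch in 2D using Reynolds' classic rules of separation (the "avoidance" above), alignment and cohesion; the radius, weights and speed cap are hypothetical values, and it is not the project's Unity code.

```python
import math
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


# Hypothetical tuning values.
NEIGHBOR_RADIUS = 2.0
SEPARATION_W, ALIGNMENT_W, COHESION_W = 0.05, 0.05, 0.01
MAX_SPEED = 1.0


def step(boids: List[Boid], dt: float) -> None:
    """One naive O(n^2) update: every boid inspects every other boid."""
    new_velocities = []
    for b in boids:
        sep_x = sep_y = avg_vx = avg_vy = cen_x = cen_y = 0.0
        count = 0
        for other in boids:
            if other is b:
                continue
            dx, dy = other.x - b.x, other.y - b.y
            dist = math.hypot(dx, dy)
            if dist < NEIGHBOR_RADIUS:
                count += 1
                if dist > 0:  # separation: push away, harder when closer
                    sep_x -= dx / (dist * dist)
                    sep_y -= dy / (dist * dist)
                avg_vx += other.vx
                avg_vy += other.vy
                cen_x += other.x
                cen_y += other.y
        vx, vy = b.vx, b.vy
        if count:
            vx += SEPARATION_W * sep_x + ALIGNMENT_W * (avg_vx / count - b.vx) + COHESION_W * (cen_x / count - b.x)
            vy += SEPARATION_W * sep_y + ALIGNMENT_W * (avg_vy / count - b.vy) + COHESION_W * (cen_y / count - b.y)
        speed = math.hypot(vx, vy)
        if speed > MAX_SPEED:  # clamp to the speed cap
            vx, vy = vx / speed * MAX_SPEED, vy / speed * MAX_SPEED
        new_velocities.append((vx, vy))
    # Apply updates after the loop so every boid reacted to the same snapshot.
    for b, (vx, vy) in zip(boids, new_velocities):
        b.vx, b.vy = vx, vy
        b.x += vx * dt
        b.y += vy * dt


random.seed(0)
flock = [Boid(random.uniform(0, 5), random.uniform(0, 5),
              random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(50)]
for _ in range(100):
    step(flock, 0.1)
```

The nested loop is exactly what Burst (and later the GPU) speeds up: the per-boid work is independent, so it parallelizes well even though the total work stays quadratic.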

Exporting to WebGPU, however, broke Burst, so I had to step back and rebuild everything using shaders. I converted the CPU-bound logic into a ping-pong shader system, where the GPU reads every boid's state from one texture, writes the updated state into a second texture, and then swaps the two. Suddenly the few thousand boids I had were running at hundreds of FPS.
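The ping-pong pattern can be illustrated on the CPU: two buffers stand in for the two state textures, each pass reads only from one and writes only to the other, and then the roles swap. This is a minimal Python sketch, assuming a per-boid state of position and velocity (like one RGBA texel) and a hypothetical `update_boid` rule that steers toward the flock's average position; on the GPU the inner loop would be one shader invocation per texel.

```python
from typing import Callable, List, Tuple

BoidState = Tuple[float, float, float, float]  # x, y, vx, vy (like one RGBA texel)


class PingPong:
    """Two state buffers; each pass reads from one and writes the other, then swaps."""

    def __init__(self, initial: List[BoidState]) -> None:
        self.read = list(initial)
        self.write = list(initial)

    def step(self, update: Callable[[int, List[BoidState]], BoidState]) -> None:
        # Every output depends only on the *read* buffer, so no boid ever
        # sees a half-updated neighbor and all of them could run in parallel.
        for i in range(len(self.read)):
            self.write[i] = update(i, self.read)
        self.read, self.write = self.write, self.read


def update_boid(i: int, state: List[BoidState], dt: float = 0.5) -> BoidState:
    """Hypothetical rule: steer toward the flock's average position, then integrate."""
    cx = sum(s[0] for s in state) / len(state)
    cy = sum(s[1] for s in state) / len(state)
    x, y, vx, vy = state[i]
    vx += (cx - x) * dt
    vy += (cy - y) * dt
    return (x + vx * dt, y + vy * dt, vx, vy)


buffers = PingPong([(2.0, 0.0, 0.0, 0.0), (0.0, -4.0, 0.0, 0.0)])
buffers.step(update_boid)
```

If the update wrote back into the same buffer, the second boid would compute the flock center from the first boid's already-moved position; the swap avoids that without any locking.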

I pushed the system as far as I could, and I was able to get up to 65,500 boids rendering at over 100 FPS in the web browser. That was a huge victory.

Now the goal is to reintroduce mesh-based offsets into the shader system, so the swarm can form shapes again and follow a central game object. Eventually, we want users to be able to manipulate that object themselves, guiding a massive swarm across the landscape in VR.
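One simple way to think about offset-based shapes: give each boid one vertex of a shape mesh as its offset from the central object (the leader), and have it steer toward leader position + offset. A minimal Python sketch under those assumptions; leader rotation is ignored for brevity, and `assign_offsets`, `steer_to_slots`, the square "mesh" and the step size are all hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def assign_offsets(num_boids: int, mesh_vertices: List[Vec3]) -> List[Vec3]:
    """Give each boid a slot in the shape; wrap around if there are more boids than vertices."""
    return [mesh_vertices[i % len(mesh_vertices)] for i in range(num_boids)]


def steer_to_slots(positions: List[Vec3], offsets: List[Vec3], leader: Vec3,
                   max_step: float) -> List[Vec3]:
    """Move each boid at most max_step toward leader + its offset."""
    moved = []
    for (px, py, pz), (ox, oy, oz) in zip(positions, offsets):
        tx, ty, tz = leader[0] + ox, leader[1] + oy, leader[2] + oz
        dx, dy, dz = tx - px, ty - py, tz - pz
        dist = math.sqrt(dx * dx + dy * dy + dz * dz)
        if dist <= max_step:
            moved.append((tx, ty, tz))  # close enough: snap into the slot
        else:
            s = max_step / dist
            moved.append((px + dx * s, py + dy * s, pz + dz * s))
    return moved


# Hypothetical "shape": the four corners of a unit square.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
offsets = assign_offsets(8, square)
positions = [(0.0, 0.0, 10.0)] * 8
leader = (5.0, 0.0, 0.0)
for _ in range(50):
    positions = steer_to_slots(positions, offsets, leader, max_step=1.0)
```

Moving `leader` each frame drags the whole formation with it, which is the behavior a user-controlled object would need; in a shader version the offsets could live in their own texture alongside the ping-pong state.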

WebGPU is so new that it comes with strange limits. Unity’s terrain system immediately hits WebGPU’s texture/shader limit, which I believe is set by the browser. A single diffuse layer works, but as soon as you add normal or specular maps, the terrain stops rendering. It’s one of those challenges that comes with pushing bleeding-edge technology.

15 Jul 2025

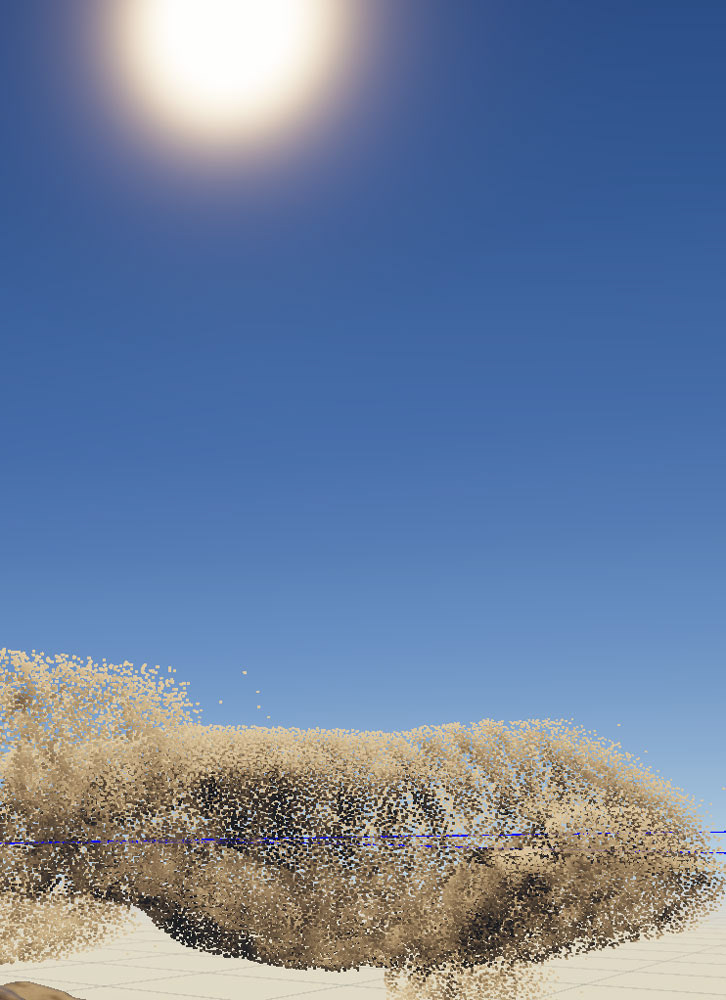

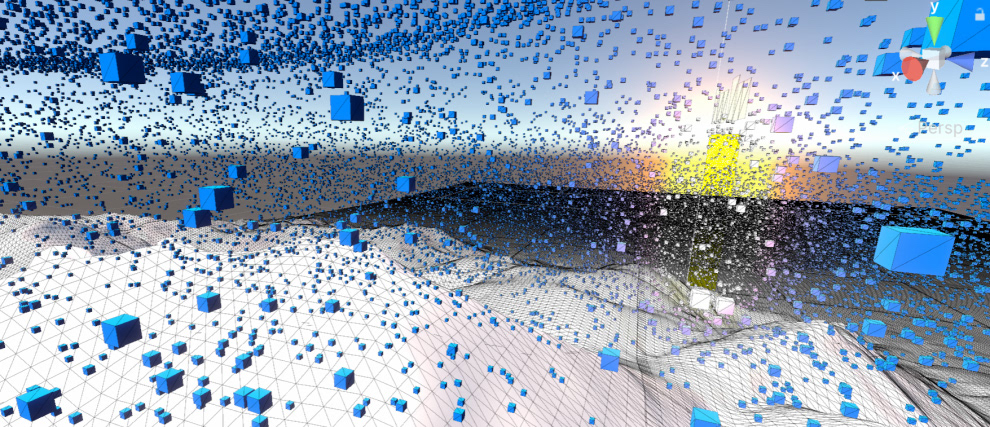

NP: The second project we’ve started is called Salt Lines, an immersive VR experience inspired by my recent art residency in the Moroccan desert. I imagined a massive point cloud, or swarm, made up of blue cubes swirling above the minimal desert landscape. We’re bringing this initial concept to life using Isaac’s programming and Justin’s 3D design skills. It’s been great to collaborate on developing the idea and to think deeply about how to create organic movement, transformation and interaction.

During research on ‘swarms’, I came across two super interesting references. The first is a YouTube video called “Swarm Intelligence: The Power of the Collective Mind”, and the second is a series of photographs of starling swarms by Danish photographer Søren Solkær. Both are definitely worth checking out.

Above: Images/Video created with Midjourney

Early concept sketch

Early concept sketch

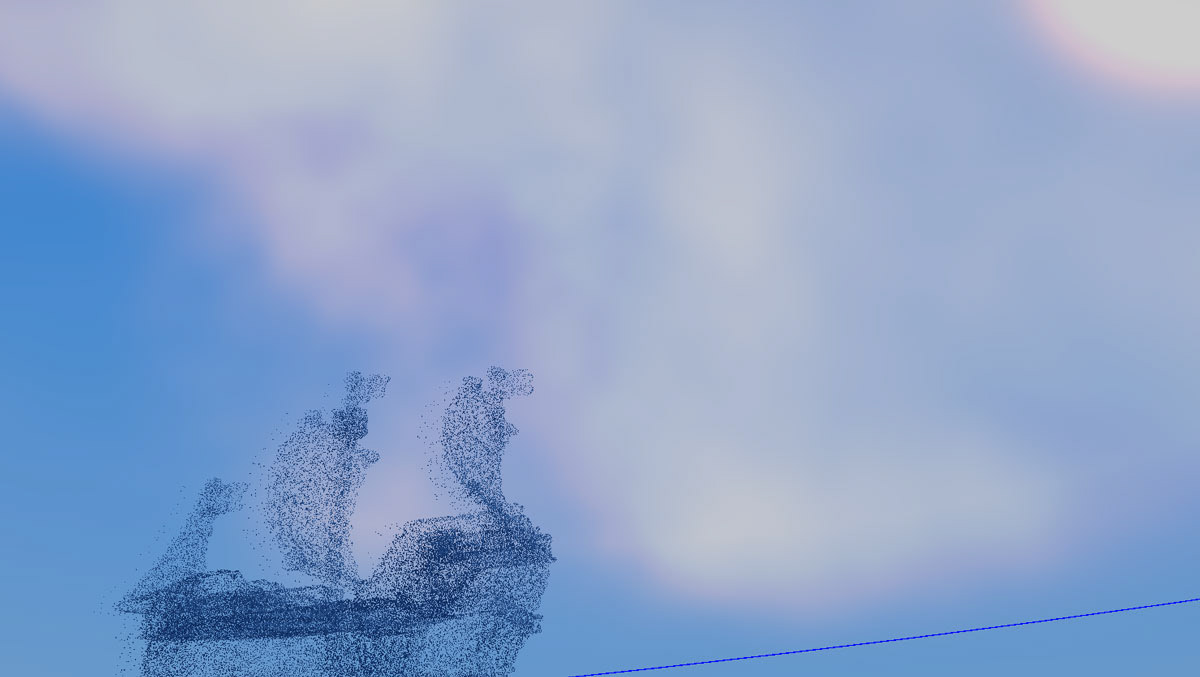

12 Jul 2025

NP: It’s been great to regroup after the successful completion of the Displacement Doorway project last year! We’re coming together again across three time zones: Austin, Atlanta and Stockholm. We have a couple of projects on the go.

The first is a quick installation with a Kinect (revisiting a project from my master’s program), where we’re mapping a point cloud to a live feed. Isaac helped update the Processing code and get it all running with the Kinect2. It’s about connecting text with spatial data: literally poetry in motion! We’re organizing a live dance performance to demo this in action, so there's more to come on that. Here are a few images of the work in progress:
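Turning a depth frame into a point cloud usually means back-projecting each pixel through a pinhole camera model. A minimal Python sketch, assuming depth values in millimeters and made-up intrinsics (`fx`, `fy`, `cx`, `cy`); real Kinect v2 intrinsics come from the sensor SDK, and this is not the Processing code used in the installation.

```python
from typing import List, Tuple


def depth_to_points(depth_mm: List[List[int]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Back-project each valid depth pixel (u, v, Z) to camera-space meters."""
    points = []
    for v, row in enumerate(depth_mm):
        for u, d in enumerate(row):
            if d == 0:  # 0 means no reading for this pixel
                continue
            z = d / 1000.0            # millimeters -> meters
            x = (u - cx) * z / fx     # pinhole model: u = fx * x / z + cx
            y = (v - cy) * z / fy     # image rows grow downward, so +y is down here
            points.append((x, y, z))
    return points


# Tiny 2x3 hypothetical frame; fx = fy = 1 keeps the arithmetic easy to check.
frame = [[1000, 0, 2000],
         [0, 500, 0]]
cloud = depth_to_points(frame, fx=1.0, fy=1.0, cx=1.0, cy=0.0)
```

Each resulting point can then be drawn (or replaced with a word of text) at its 3D position every frame, which is one way a live feed becomes a moving point cloud.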